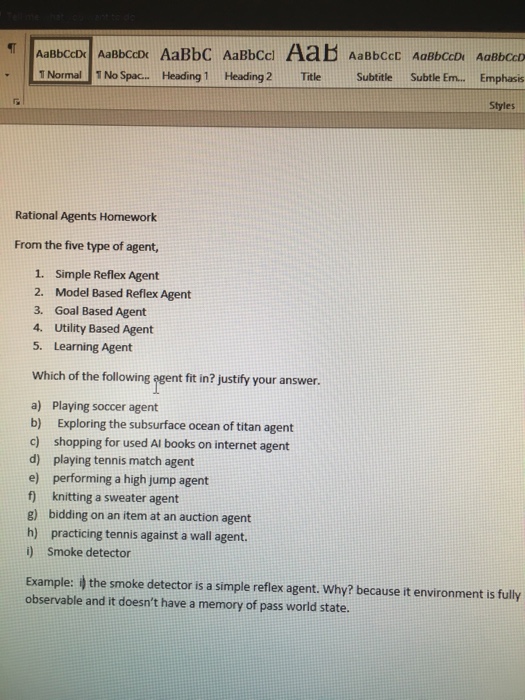

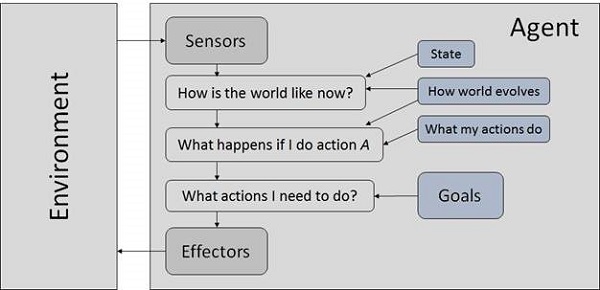

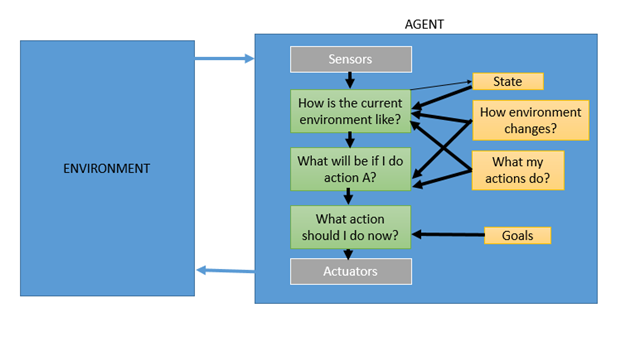

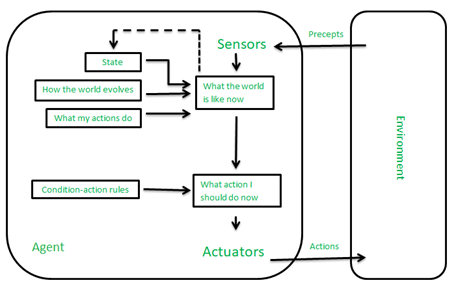

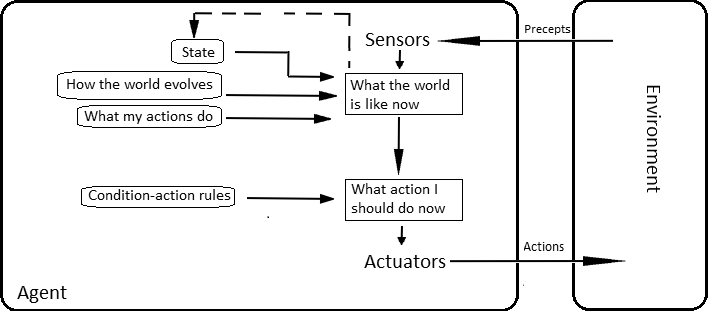

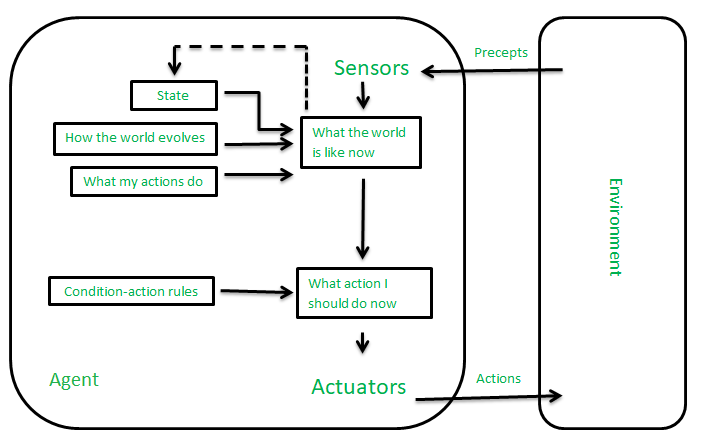

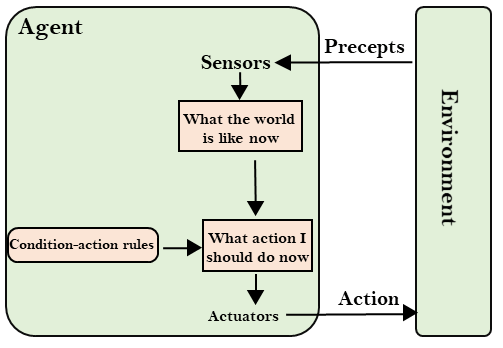

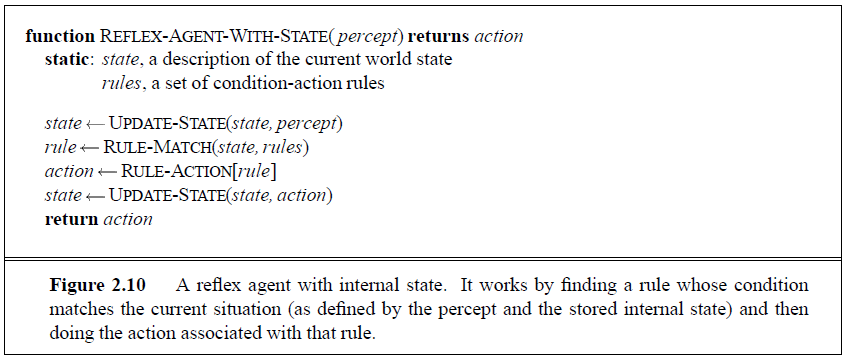

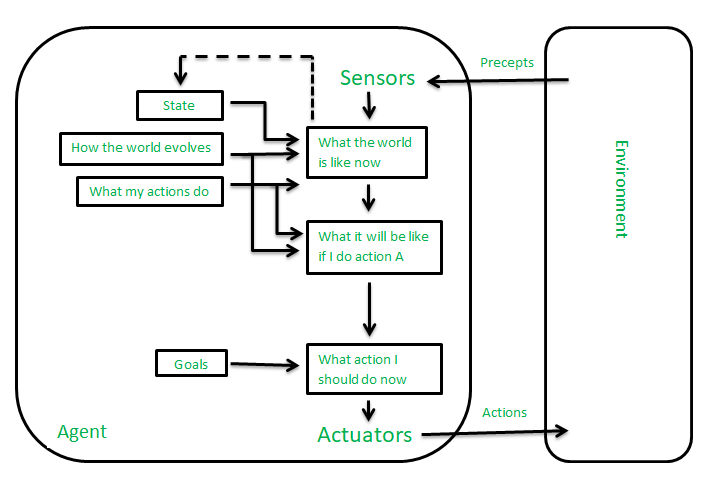

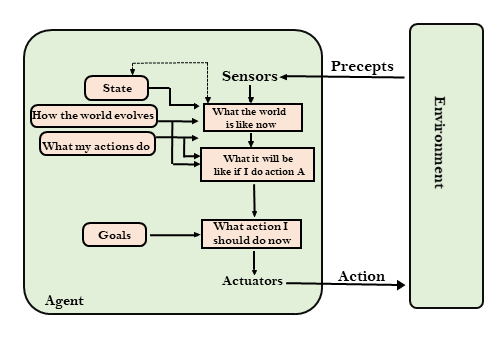

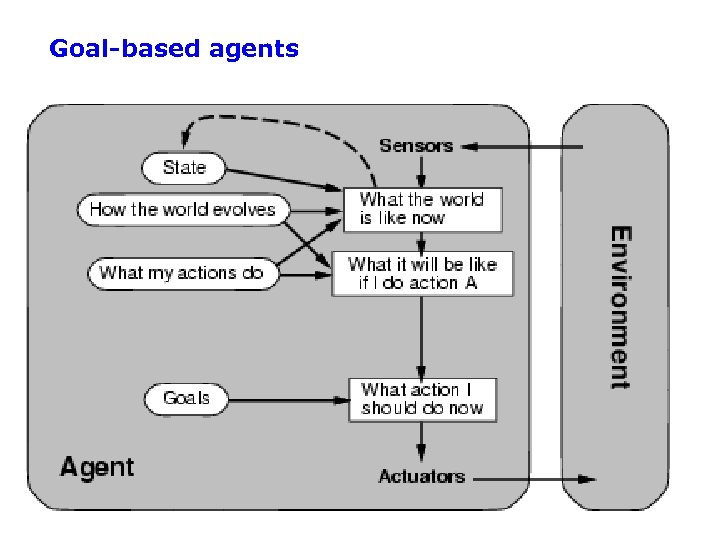

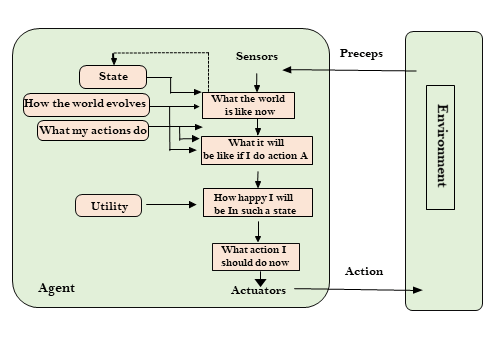

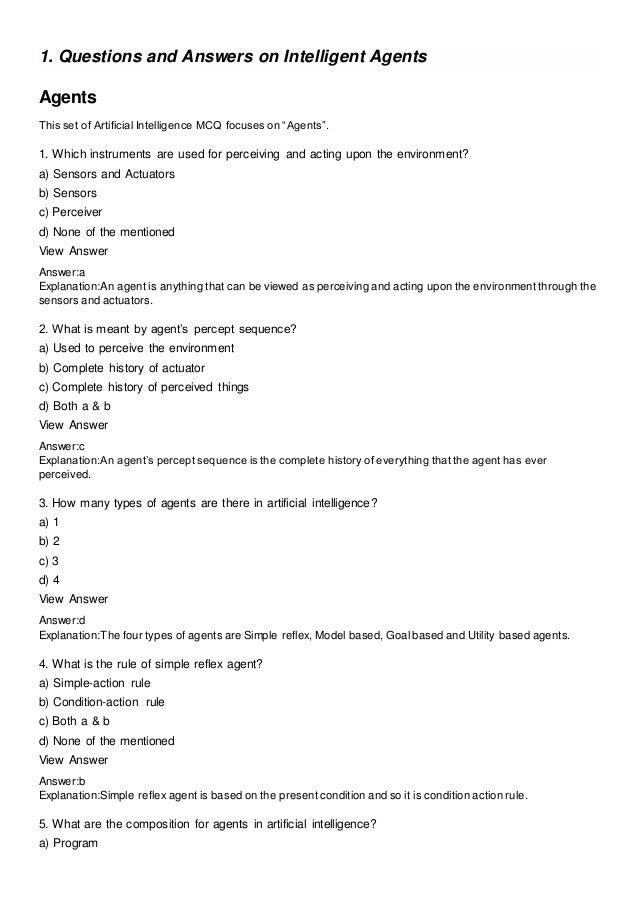

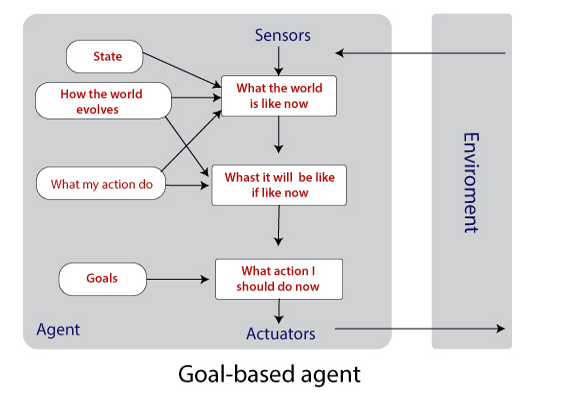

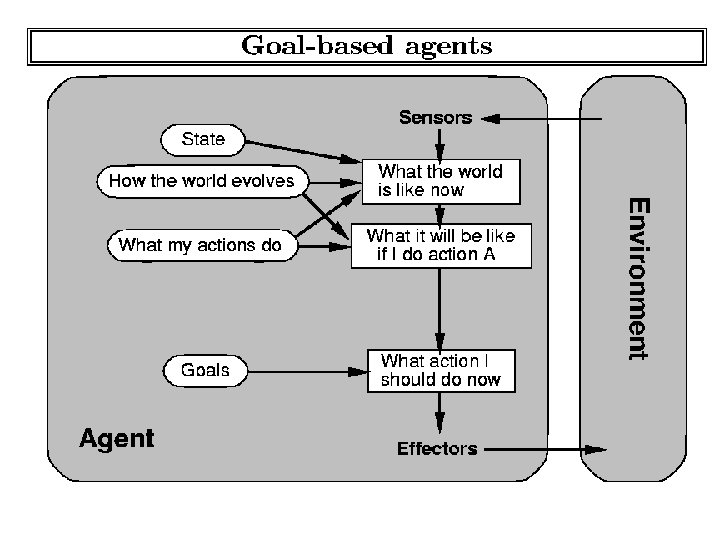

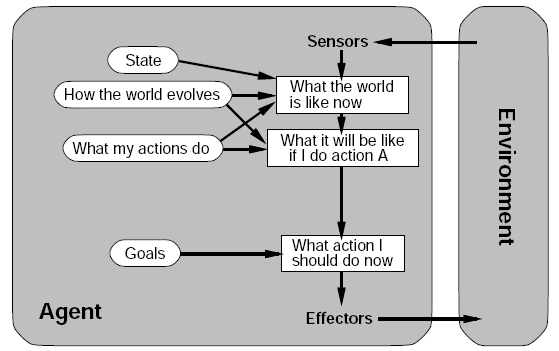

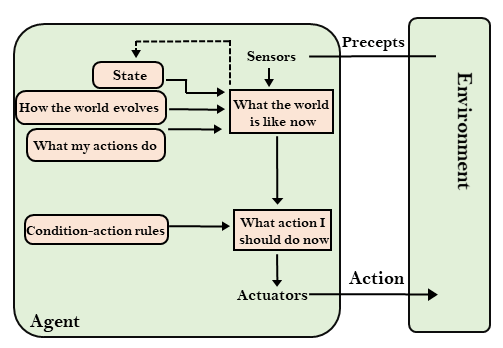

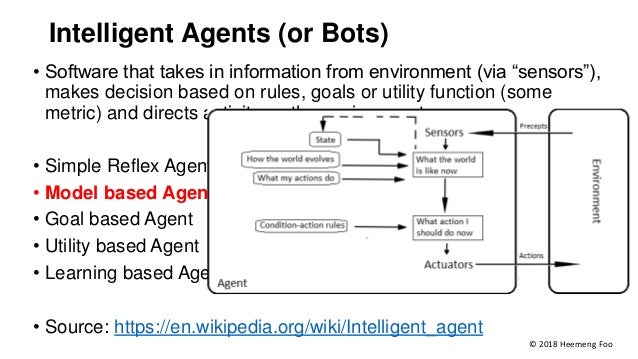

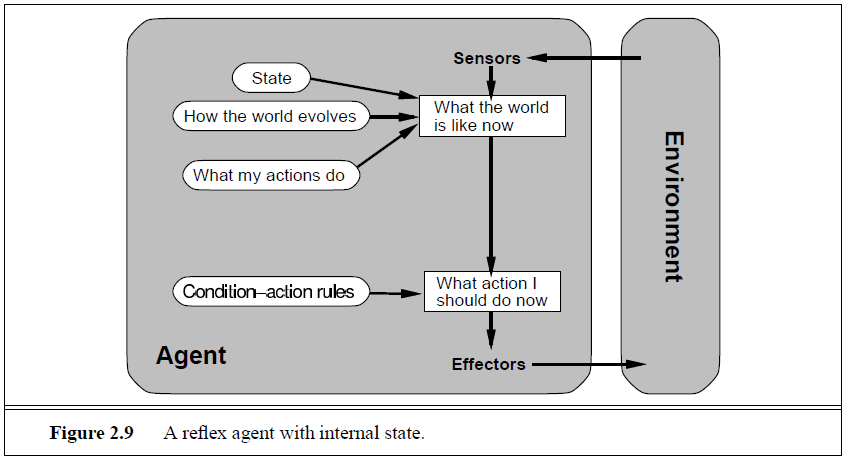

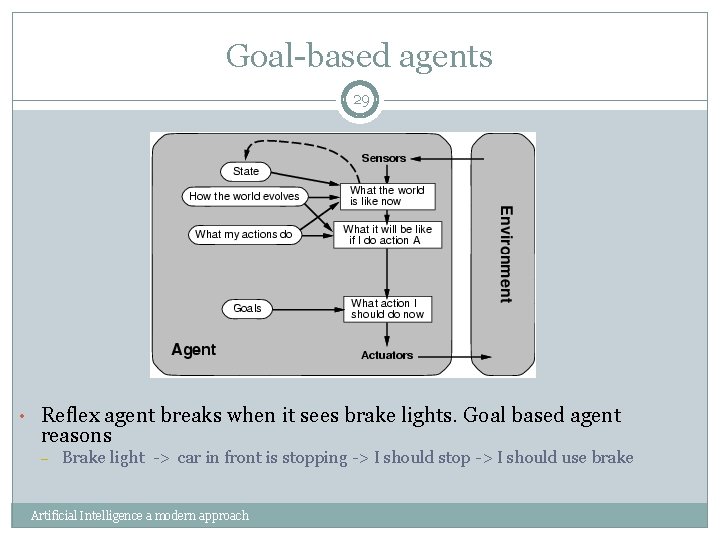

[Figure: Agent Frameworks, Goal-Based Agents (1). Diagram of a goal-based agent: sensors and effectors connect the agent to its environment; inside the agent, State, How the world evolves, and What my actions do feed "What the world is like now" and "What it will be like if I do action A", which together with Goals determine "What action I should do now".]

Agent Frameworks, Goal-Based Agents (2). Implementation and properties: instantiation of the generic skeleton agent.

Figure 2.11: A simple reflex agent takes actions based on current situational experience. For example, if you set your smart bulb to turn on at a given time, say 9 pm, the bulb will not recognize that the days are longer; it turns on simply because that is the rule it follows.

Reflex, model-based, goal-based, and utility-based agents: simple reflex agents can be programmed using if-else rules, where the consequent can be a single statement or a function that embodies a behavior. Intrinsic to this agent is that there are no set goals, and previous states are not remembered. A model-based agent knows its previous state but still …
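The smart-bulb example can be sketched as a simple reflex agent with a single if-else rule. This is a minimal, hypothetical illustration: it assumes the only percept is the current clock time, and the names `smart_bulb_agent` and `SWITCH_ON_AT` are invented for the example. Because the rule reads only the current percept and stores nothing, the bulb has no way to notice that conditions outside have changed.

```python
from datetime import time

SWITCH_ON_AT = time(21, 0)  # hypothetical fixed rule: 9 pm


def smart_bulb_agent(current_time: time) -> str:
    """Simple reflex agent: the action depends only on the current percept (the clock)."""
    # Condition-action rule: if it is 9 pm or later, turn the light on.
    if current_time >= SWITCH_ON_AT:
        return "ON"
    # Any time before 21:00 (including the early morning) maps to OFF in this sketch.
    return "OFF"


print(smart_bulb_agent(time(21, 30)))  # ON
print(smart_bulb_agent(time(18, 0)))   # OFF
```

No goals and no memory are involved: the same clock reading always produces the same action.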

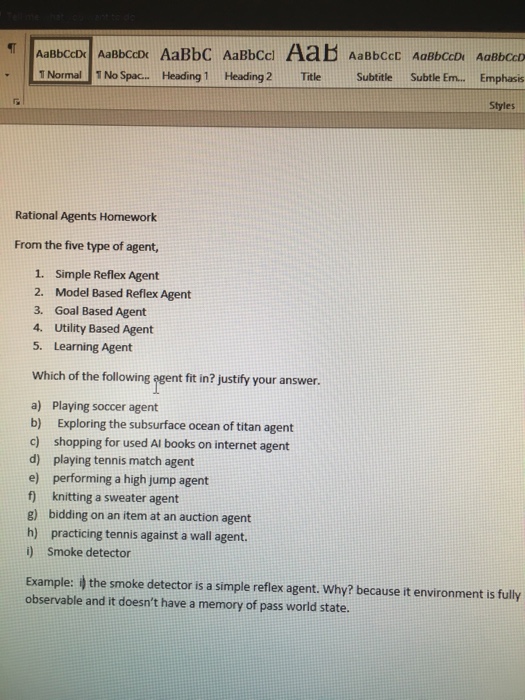

Solved From The Five Type Of Agent Simple Reflex Agent Chegg Com

Reflex and goal-based agents - making decisions

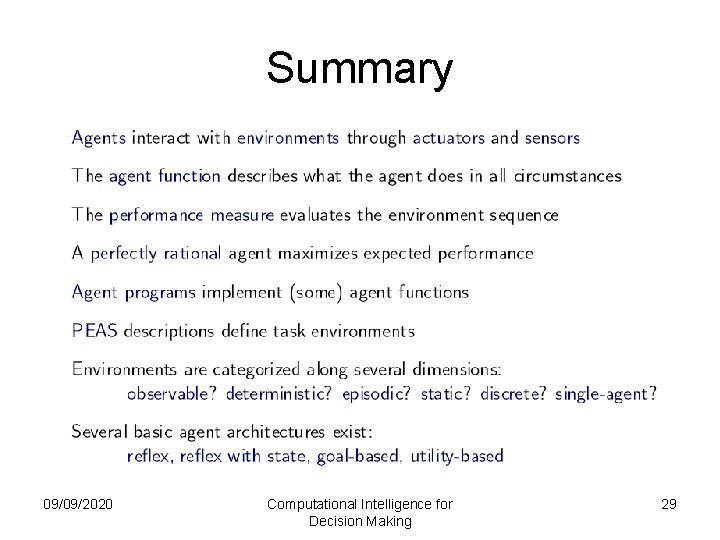

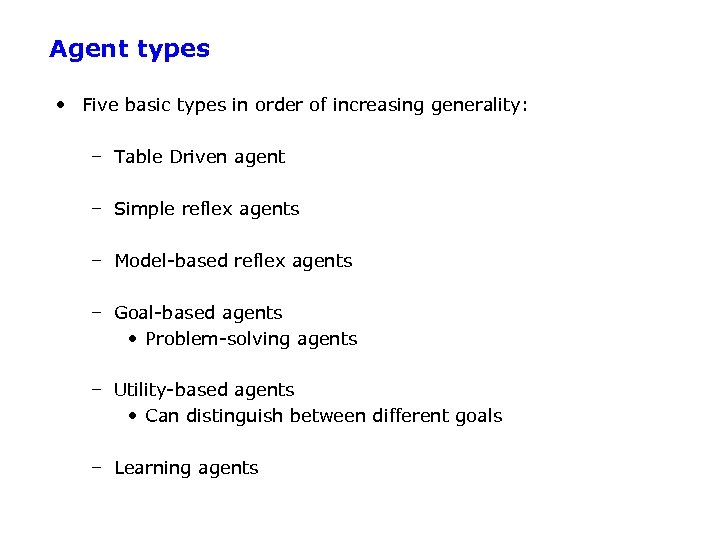

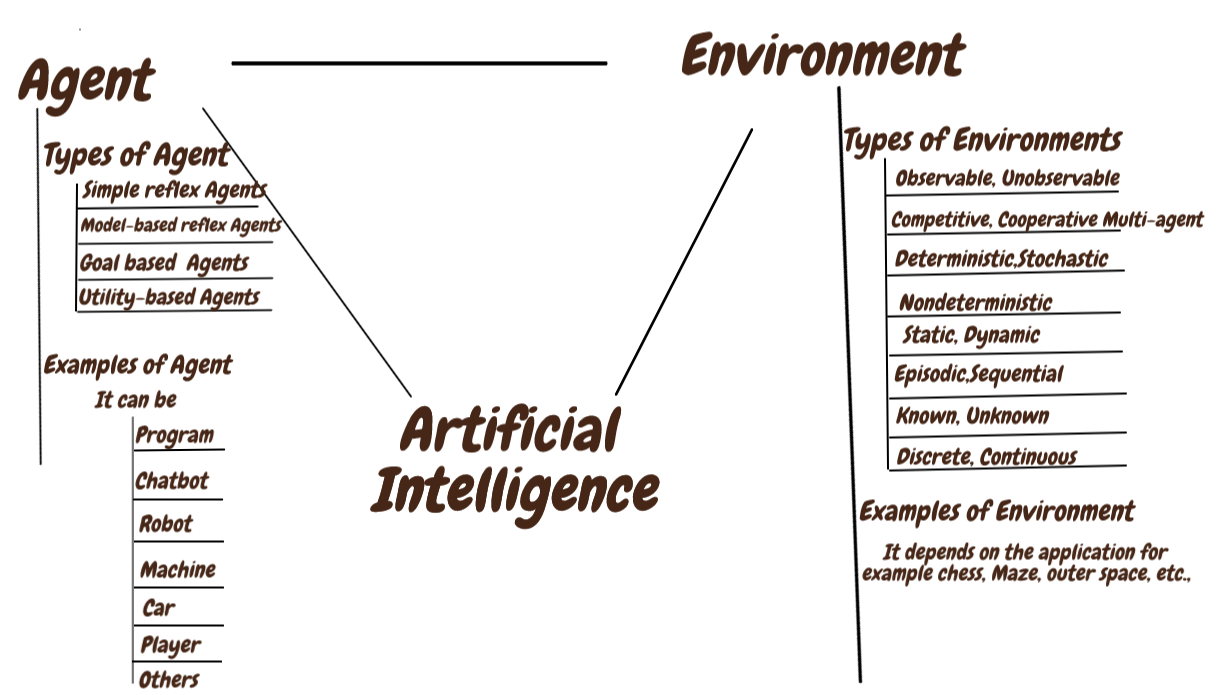

Goal-based agent: a goal-based agent is one that chooses its actions in order to achieve goals. It is a problem-solving agent and is more flexible than a model-based reflex agent. A goal-based agent considers future actions.

Agent types: four basic types, in order of increasing generality:

1. Simple reflex agents
2. Reflex agents with state/model
3. Goal-based agents
4. Utility-based agents

Agents In Artificial Intelligence Geeksforgeeks

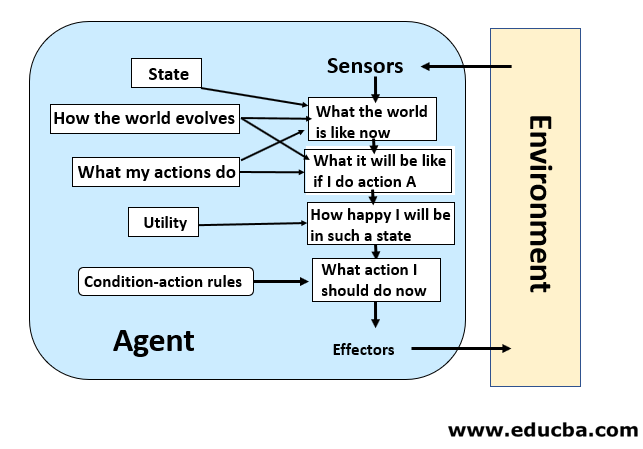

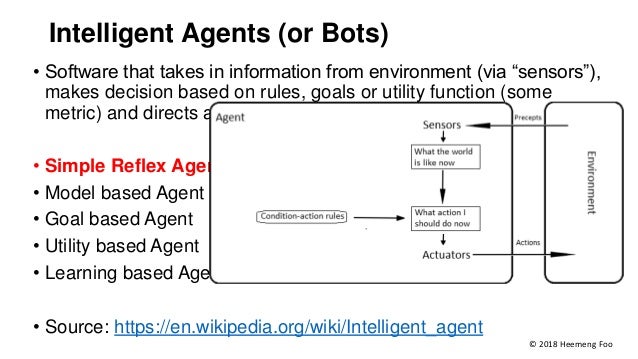

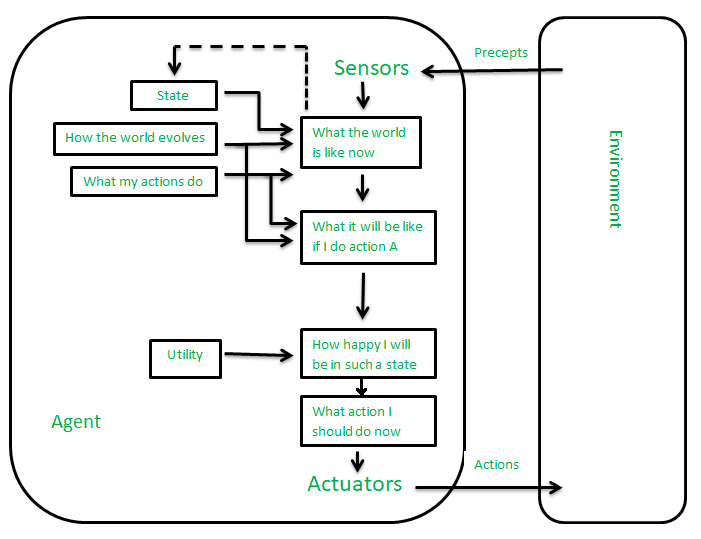

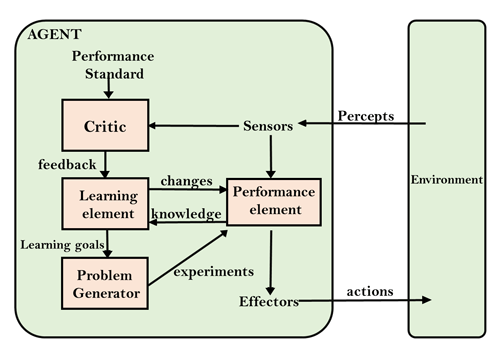

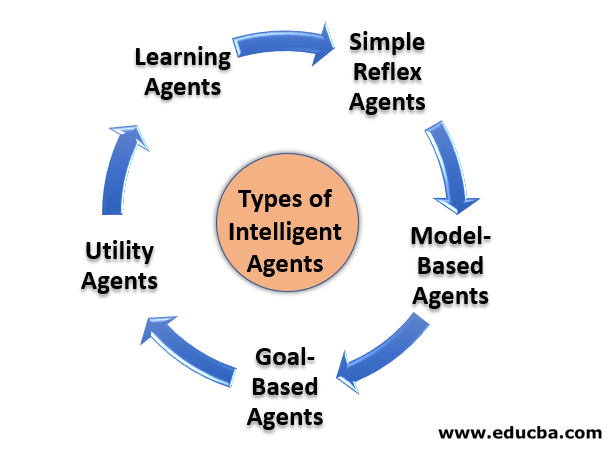

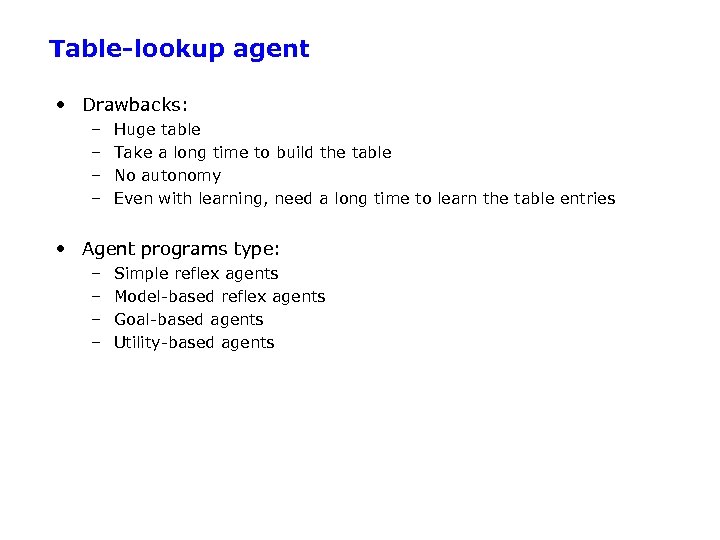

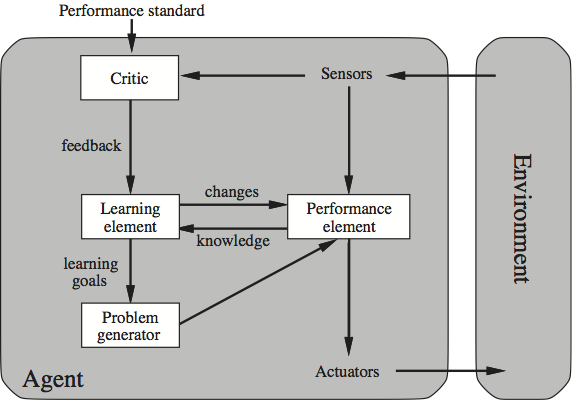

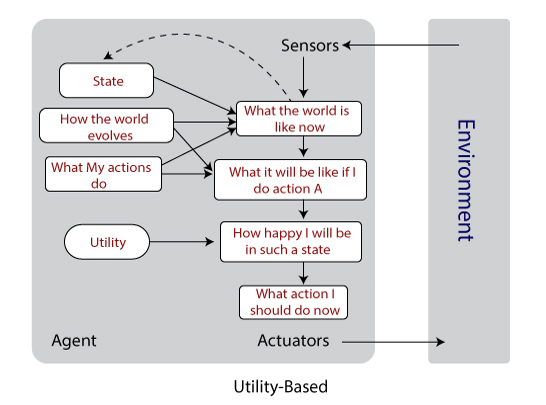

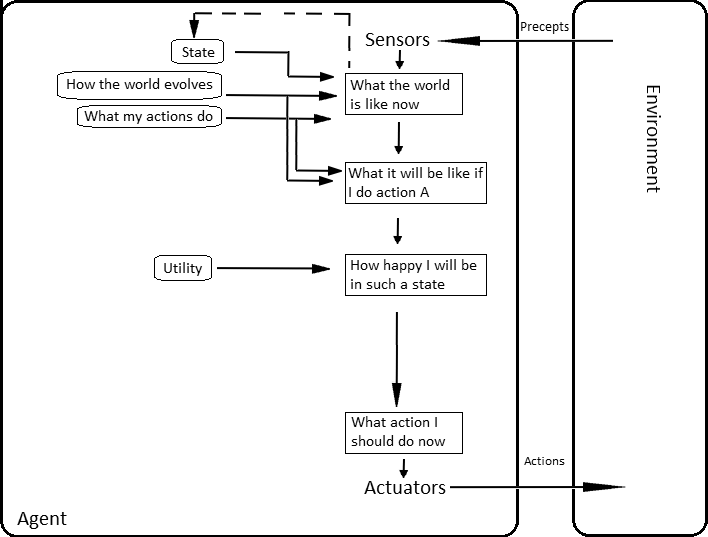

Goal-based agents and utility-based agents have many advantages in terms of flexibility and learning. Utility-based agents can make rational decisions when goals alone are inadequate: 1) when goals conflict, the utility function specifies the appropriate trade-off; 2) when there are several goals, utility provides a way in which the likelihood of success can be weighed against the importance of the goals.

Types of agents: based on the way an agent handles a request or takes an action upon perceiving its environment, intelligent agents can be classified into four categories: simple reflex agents, agents that keep track of the world, goal-based agents, and utility-based agents. We shall discuss each of them briefly.

Simple reflex agents …

A learning agent is able to learn and adapt its decision-making capabilities based on experience.
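The second utility case described above, weighing the likelihood of success against the importance of a goal, amounts to comparing expected utilities. A minimal sketch, assuming each option's success probability and goal value are known numbers and that failure is worth zero utility; the class `Option` and the example figures are hypothetical:

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    p_success: float   # assumed known probability that the goal is achieved
    importance: float  # utility the agent gets if the goal is achieved


def expected_utility(option: Option) -> float:
    # Likelihood of success weighed against importance (failure assumed worth 0).
    return option.p_success * option.importance


def choose(options):
    """Pick the option with the highest expected utility."""
    return max(options, key=expected_utility)


options = [
    Option("ambitious goal", p_success=0.2, importance=100.0),  # EU = 20
    Option("modest goal", p_success=0.9, importance=30.0),      # EU = 27
]
print(choose(options).name)  # modest goal
```

A purely goal-based agent would treat both goals as simply "achieved or not"; the utility view lets the less important but far more likely goal win.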

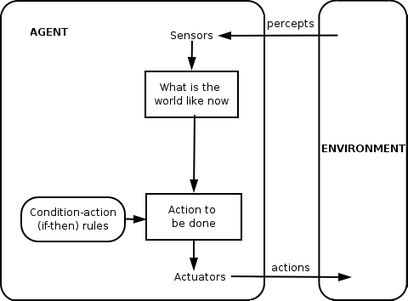

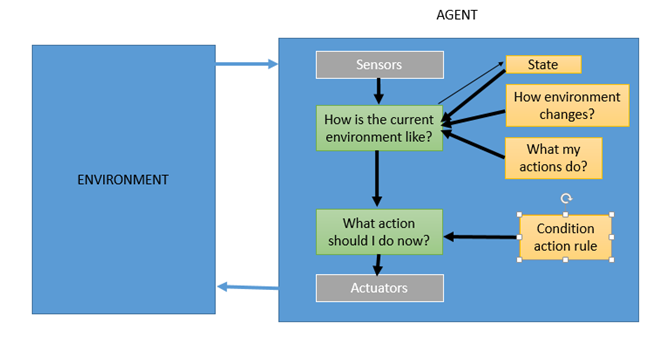

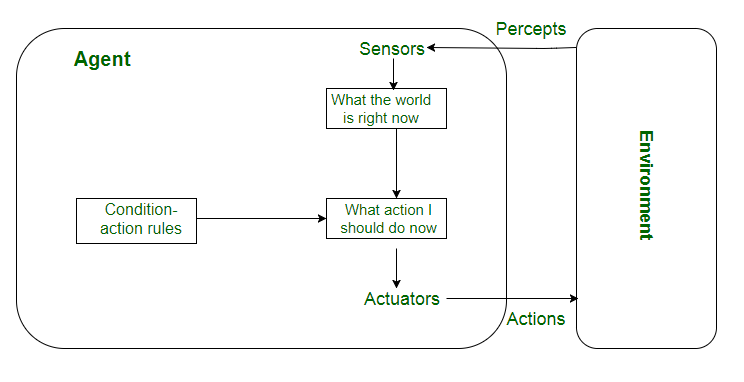

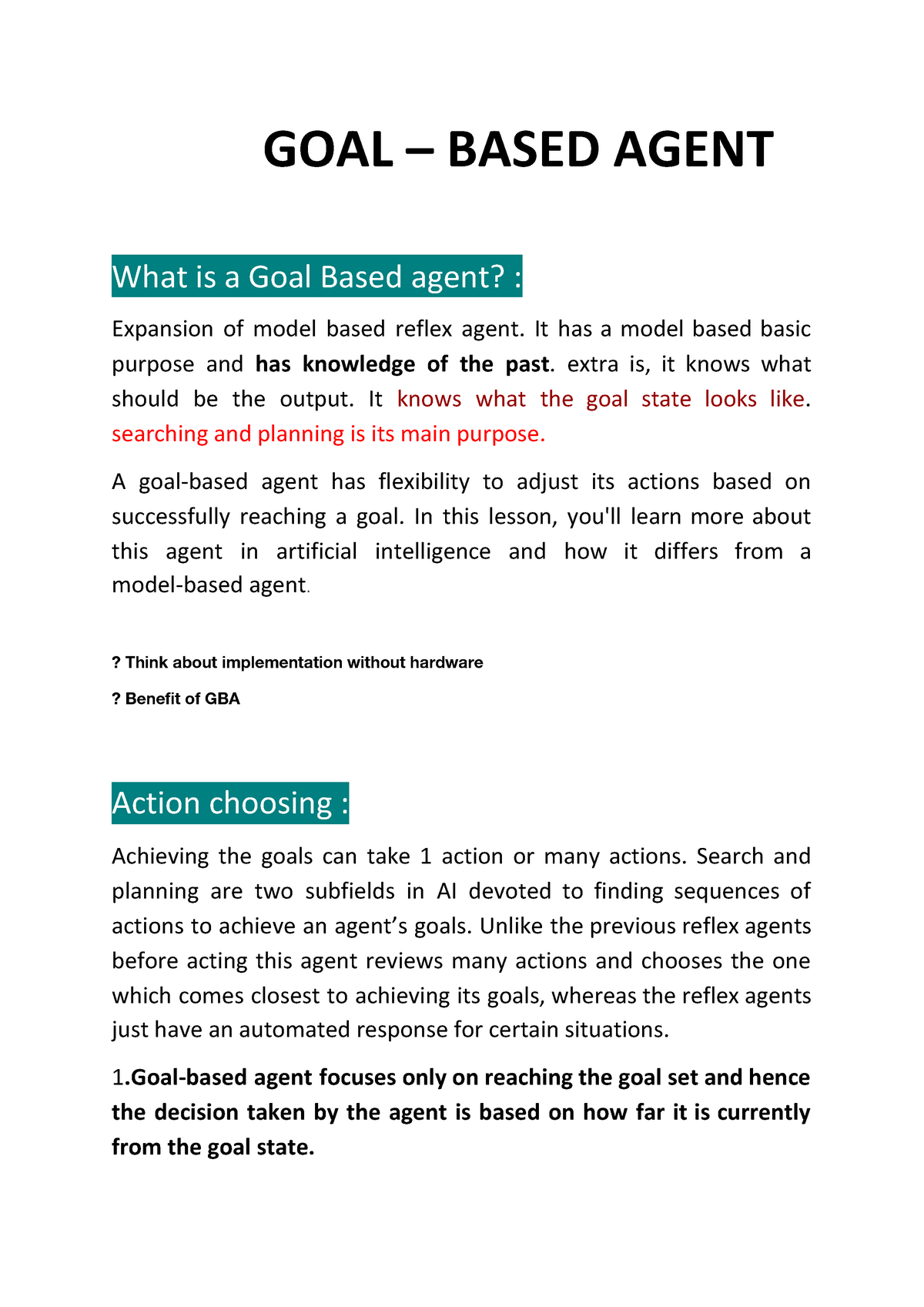

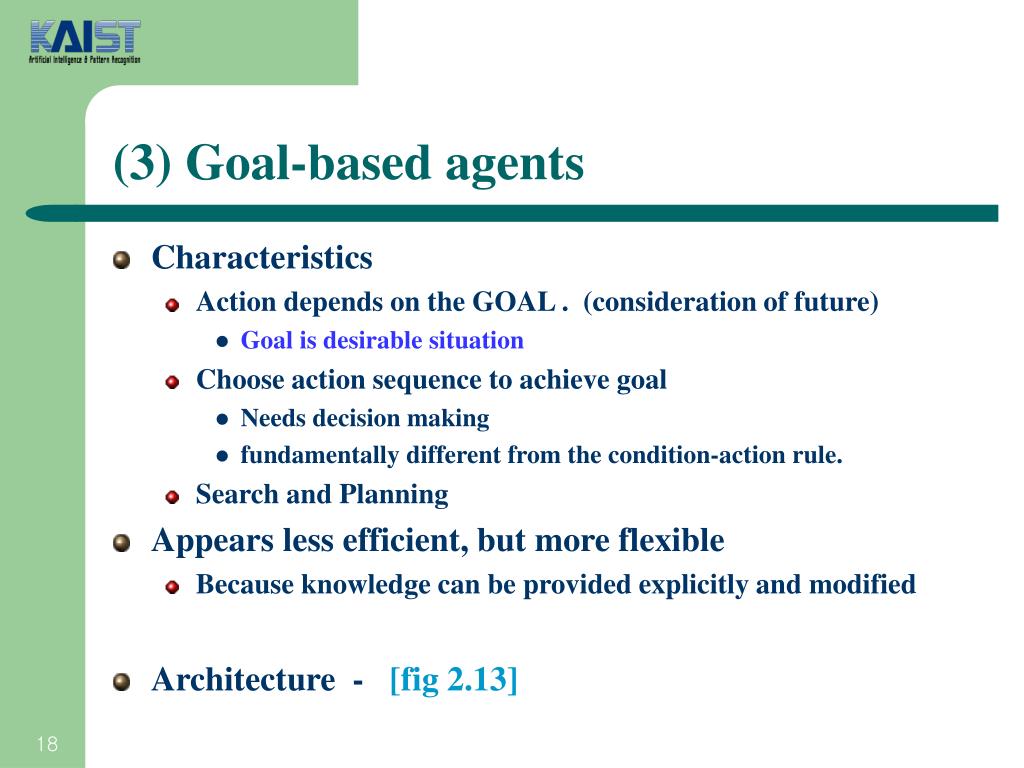

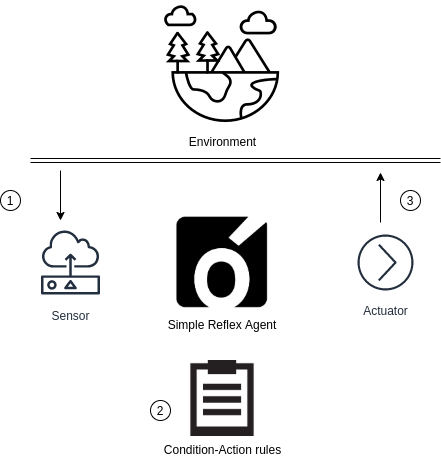

At other times, however, the agent must also consider search and planning. Decision making of this latter kind involves consideration of the future. Goal-based agents are commonly more flexible than reflex agents.

Goal-based agents: knowing the current state is not sufficient unless the goal is also known. Therefore, a goal-based agent selects, among multiple possibilities, the one that helps it reach its goal. Note: with the help of searching and planning (subfields of AI), it becomes easy for the goal-based agent to reach its destination.

Simple reflex agents act only on the basis of the current percept, ignoring the rest of the percept history. The agent function is based on the condition-action rule 'if condition, then action'. This agent function only succeeds when the environment is fully observable.

A simple reflex agent is typically employed when all the information about the current game state is directly observable (e.g. Chess, Checkers, Tic-Tac-Toe, Connect Four) and the choice of move depends only on the current state, that is, when the agent does not need to remember anything about past states to make a decision.

Utility-based agent. Explanation: a utility-based agent uses an extra component, utility, that provides a measure of success at a given state. It evaluates how efficient that state is for achieving the goal; this measure specifies the "happiness" of the agent.

3. Goal-based agents. Answer: a goal-based agent has an agenda, you might say. It operates based on a goal in front of it and makes decisions based on how best to reach that goal. Unlike a simple reflex agent, which makes decisions based solely on the current environment, a goal-based agent is capable of thinking beyond the present moment to decide the best actions to take in …
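The Tic-Tac-Toe case mentioned above can be sketched as a simple reflex agent: an ordered list of condition-action rules that look only at the current board. This is a hypothetical sketch; the rule order (win, block, center, corners, edges) and the encoding of the board as a list of nine cells holding "X", "O" or " " are assumptions, and the agent does not guarantee optimal play.

```python
# The eight winning lines on a 3x3 board indexed 0..8 (row by row).
LINES = [
    (0, 1, 2), (3, 4, 5), (6, 7, 8),  # rows
    (0, 3, 6), (1, 4, 7), (2, 5, 8),  # columns
    (0, 4, 8), (2, 4, 6),             # diagonals
]


def winning_square(board, player):
    """Return an empty square that completes a line for `player`, or None."""
    for line in LINES:
        cells = [board[i] for i in line]
        if cells.count(player) == 2 and cells.count(" ") == 1:
            return line[cells.index(" ")]
    return None


def reflex_tictactoe_agent(board, me="X", opponent="O"):
    """Pick a square using only the current board (no memory of past moves)."""
    move = winning_square(board, me)            # rule 1: win if possible
    if move is None:
        move = winning_square(board, opponent)  # rule 2: otherwise block
    if move is None and board[4] == " ":
        move = 4                                # rule 3: otherwise take the center
    if move is None:
        free = [i for i in (0, 2, 6, 8, 1, 3, 5, 7) if board[i] == " "]
        move = free[0] if free else None        # rule 4: corners, then edges
    return move


board = ["X", "X", " ",
         "O", "O", " ",
         " ", " ", " "]
print(reflex_tictactoe_agent(board))  # 2 (completes the top row)
```

Because the whole game state is visible on the board, the rules never need any record of earlier moves.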

Agents In Artificial Intelligence Coding Ninjas Blog

Types Of Agents In Artificial Intelligence Skilllx

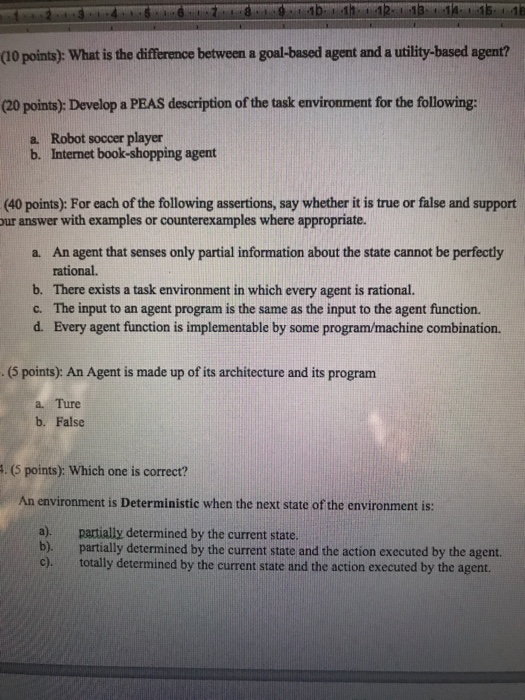

Occasionally, goal-based action selection is straightforward (e.g. follow the action that leads directly to the goal); …

Goal-based agent: the correct action depends upon what the agent is trying to accomplish. The agent's knowledge consists of a model, the current state (percepts), and what it is trying to achieve (goals). It selects actions that will accomplish its goals, and it often needs to do search and planning to determine which action to take in a given situation.

Simple reflex agent: these agents take decisions based on the present percepts and ignore the rest of the percept history. These agents only succeed in a fully observable environment. The simple reflex agent does not consider any part of the percept history during its decision and action process.
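The straightforward case of goal-based action selection, following the action that leads directly to the goal, can be sketched as a one-step lookahead: the agent uses its model of "what my actions do" to predict each action's result and checks it against the goal. A minimal sketch under stated assumptions; the function `one_step_goal_agent`, the 1-D world, and the `MOVES` table are hypothetical:

```python
from typing import Callable, Iterable, Optional, TypeVar

S = TypeVar("S")  # state type
A = TypeVar("A")  # action type


def one_step_goal_agent(
    state: S,
    actions: Iterable[A],
    result: Callable[[S, A], S],     # the agent's model: what my actions do
    goal_test: Callable[[S], bool],  # what the agent is trying to achieve
) -> Optional[A]:
    """Return an action whose predicted outcome satisfies the goal, or None."""
    for action in actions:
        if goal_test(result(state, action)):
            return action
    # No single action reaches the goal; search or planning would be needed.
    return None


# Hypothetical 1-D world: the state is an integer position.
MOVES = {"left": -1, "right": +1}


def step(position: int, action: str) -> int:
    return position + MOVES[action]


print(one_step_goal_agent(4, MOVES, step, lambda p: p == 5))  # right
print(one_step_goal_agent(0, MOVES, step, lambda p: p == 5))  # None
```

Returning `None` marks exactly the situation the text describes, where a longer search or plan is required.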

Chapter 2 Intelligent Agents

Section 02

Utility-based agents

- In two kinds of cases, goals are inadequate but a utility-based agent can still make rational decisions.
- First, when there are conflicting goals, only some of which can be achieved (for example, speed and safety), the utility function specifies the appropriate trade-off.
- Second, when there are several goals that …

- Agent program: the agent program implements the agent function.
- Rationality: the property of an agent that chooses the action expected to maximize its performance measure.
- Autonomy: the property of an agent that acts on its own and makes its own decisions.
- Reflex agent: an agent that selects actions on the basis of the current percept.
- Sequential environment: the current decision presented to the agent could affect all future decisions.
- Dynamic environment: an environment that can change while the agent is thinking.

… model-based reflex agents, 3) goal-based agents, 4) utility-based agents. The simplest kind of agent is the _____. (Answer: simple reflex agent.)
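The first case above, conflicting goals such as speed and safety, can be sketched with a weighted-sum utility function whose weights encode the trade-off. The route names, the scores on a 0 to 1 scale, and the default weights are hypothetical, and a weighted sum is only one simple way to express such a trade-off:

```python
# Hypothetical candidate routes, each scored on two conflicting goals (0 to 1).
routes = {
    "motorway":   {"speed": 0.9, "safety": 0.5},
    "back_roads": {"speed": 0.4, "safety": 0.9},
}


def utility(scores, w_speed=0.5, w_safety=0.5):
    # The weights specify how much speed the agent will trade for safety.
    return w_speed * scores["speed"] + w_safety * scores["safety"]


def choose_route(routes, **weights):
    """Return the route name with the highest utility under the given weights."""
    return max(routes, key=lambda name: utility(routes[name], **weights))


print(choose_route(routes))                             # motorway (0.70 vs 0.65)
print(choose_route(routes, w_speed=0.2, w_safety=0.8))  # back_roads (0.58 vs 0.80)
```

Neither goal can be fully satisfied by one route, so a goal test alone cannot decide; changing the weights changes the decision without changing the goals.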

Seg 4560 Computational Intelligence For Decision Making Chapter

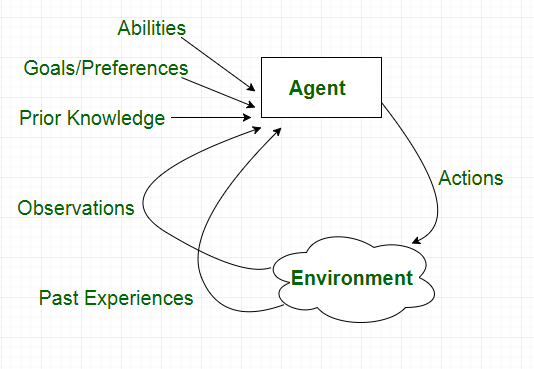

Model-based reflex agents represent the current state based on history. Goal-based agents are proactive agents and work by planning and searching. Utility-based agents have an extra component, a utility measure, on top of the goal-based agent.

In artificial intelligence, an intelligent agent (IA) is anything that perceives its environment, takes actions autonomously in order to achieve goals, and may improve its performance with learning or may use knowledge. They may be simple or complex: a thermostat is considered an example of an intelligent agent, as is a human being, as is any system that meets the definition, such as a firm.

Like model-based agents, goal-based agents also have an internal model of the game state. Whereas model-based agents only need to know how to update their internal model of the game state using new observations, goal-based agents have the additional requirement of knowing how their actions will affect the game state.

Ai Class Test Cse Hub

Agents In Artificial Intelligence Understanding How Agents Should Act

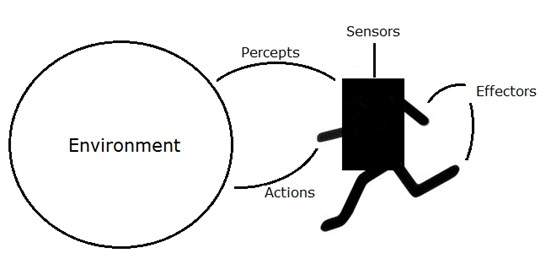

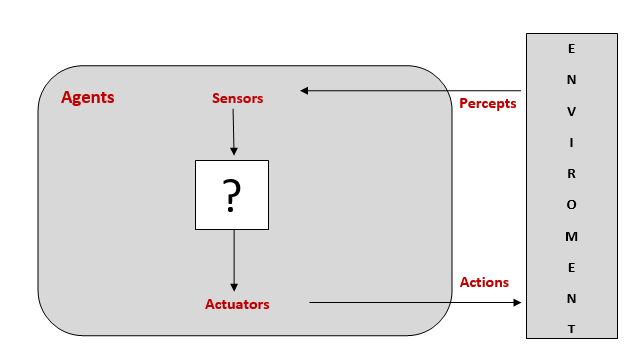

Reinforcement learning is often explained with the term "agent" in the loop. The agent stands for the module of the system that makes the decision, and the agent's policy is its decision-making process. In the simplest form, a policy looks similar to a behavior tree. Other policies are defined by a Q-table (Q-learning), which works like an if-then matrix. If a certain state is …

A rational agent is an agent that, for every situation, selects the action that maximizes its expected performance based on its perception and built-in knowledge. The definition depends on:

- the performance measure (success criterion);
- the agent's percept sequence to date;
- the actions that the agent can perform;
- the agent's knowledge of the environment.

This means that an agent can be …

In artificial intelligence, an intelligent agent (IA) is an autonomous entity which acts, directing its activity towards achieving goals (i.e. it is an agent), upon an environment using observation through sensors and consequent actuators (i.e. it is intelligent). An intelligent agent is a program that can make decisions or perform a service based on its environment, user input and …
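The Q-table policy mentioned above can be sketched as a lookup of the highest-valued action for the current state, together with the standard Q-learning update Q(s, a) ← Q(s, a) + α[r + γ·max over a′ of Q(s′, a′) − Q(s, a)]. The two-action world, the learning rate `alpha=0.5`, and the discount `gamma=0.9` are hypothetical, and exploration (for example epsilon-greedy action selection) is omitted for brevity:

```python
from collections import defaultdict

ACTIONS = ["left", "right"]  # hypothetical action set
# Q-table: maps (state, action) to an estimated return; unseen entries default to 0.0.
Q = defaultdict(float)


def greedy_policy(state):
    """Policy read off the Q-table: in this state, take the highest-valued action."""
    return max(ACTIONS, key=lambda a: Q[(state, a)])


def q_update(state, action, reward, next_state, alpha=0.5, gamma=0.9):
    """One Q-learning step: move Q(s, a) toward r + gamma * max_a' Q(s', a')."""
    best_next = max(Q[(next_state, a)] for a in ACTIONS)
    target = reward + gamma * best_next
    Q[(state, action)] += alpha * (target - Q[(state, action)])


# Hypothetical experience: moving right from state 0 earned a reward of 1.
q_update(0, "right", reward=1.0, next_state=1)
print(Q[(0, "right")])   # 0.5
print(greedy_policy(0))  # right
```

Read this way, the table really is an "if in state s, then do the best-valued action" matrix, and learning only changes the numbers in it.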

Types Of Agents In Artificial Intelligence

From the simplest to the most sophisticated intelligent actions, almost all intelligent systems are based on one or several of the following agent programs: simple reflex agents, model-based reflex agents, goal-based agents, utility-based agents and learning agents. Simple reflex agents: this program constitutes the basic algorithm needed to initiate some action. The example …

For the reflex agent, on the other hand, we would have to rewrite a large number of condition–action rules. Of course, the goal-based agent is also more flexible with respect to reaching different destinations. Simply by specifying a new destination, we can get the goal-based agent to come up with a new behavior.
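The point about destinations can be sketched with a goal-based route planner that uses breadth-first search: the goal is just an argument, so a new destination yields new behavior without rewriting any rules. The road map and town names are hypothetical, and breadth-first search (fewest roads) is only one of many search strategies a goal-based agent might use:

```python
from collections import deque

# Hypothetical road map: each town lists its directly connected neighbours.
ROADS = {
    "A": ["B", "C"],
    "B": ["A", "D"],
    "C": ["A", "D", "E"],
    "D": ["B", "C", "F"],
    "E": ["C", "F"],
    "F": ["D", "E"],
}


def plan_route(start, goal, roads=ROADS):
    """Breadth-first search: return a path with the fewest roads, or None."""
    frontier = deque([[start]])
    visited = {start}
    while frontier:
        path = frontier.popleft()
        if path[-1] == goal:
            return path
        for nxt in roads[path[-1]]:
            if nxt not in visited:
                visited.add(nxt)
                frontier.append(path + [nxt])
    return None  # goal unreachable


# Same agent, new destination, new behavior: no condition-action rules change.
print(plan_route("A", "F"))  # ['A', 'B', 'D', 'F']
print(plan_route("A", "E"))  # ['A', 'C', 'E']
```

A reflex agent for the same map would need a separate rule for every (town, destination) pair.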

Intelligent Agent Wikiwand

Model-based reflex agents

Goal-based agents
- Goal information guides the agent's actions (it looks to the future).
- Sometimes achieving the goal is simple, e.g. it takes a single action.
- Other times, the goal requires reasoning about long sequences of actions.
- Flexible: simply reprogram the agent by changing its goals.

Utility-based agents …

1. Simple reflex agents: the simplest kind of agent is the simple reflex agent. It responds directly to percepts, i.e. it selects actions on the basis of the current percept, ignoring the rest of the percept history. Condition-action rules allow the agent to make the connection from percept to action. Condition-action rule: if …

Agents in Artificial Intelligence: artificial intelligence is defined as the study of rational agents. A rational agent could be anything that makes decisions, such as a person, firm, machine, or software. It carries out the action with the best outcome after considering past and current percepts (the agent's perceptual inputs at a given instance). An AI system is composed of an agent …

Utility Based Agents Definition Interactions Decision Making Video Lesson Transcript Study Com

Intelligent Agents

Intelligent agent: an entity in a program or environment capable of generating action. An agent uses perception of the environment to make decisions about actions to take. The perception capability is usually called a sensor. The actions can depend on the most recent perception or on the entire history (the percept sequence).

A model-based reflex agent is an intelligent agent that uses percept history and internal memory to make decisions about the "model" of the world around it. Also know: what is a goal-based agent?

Goal Based Reflex Agent # Artificial Intelligence Online Course Lecture 6
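The idea that actions can depend on the entire percept sequence, not just the latest percept, can be sketched as a table-driven agent that appends each percept to its history and looks up the whole sequence. The vacuum-world percepts, the table entries, and the `"NoOp"` default are hypothetical:

```python
def make_table_driven_agent(table):
    """Return an agent whose action is looked up using the entire percept sequence."""
    percepts = []  # the percept sequence to date

    def agent(percept):
        percepts.append(percept)
        # "NoOp" is an assumed default for sequences missing from the table.
        return table.get(tuple(percepts), "NoOp")

    return agent


# Hypothetical table for a two-square vacuum world; percepts are (location, status).
table = {
    (("A", "Clean"),): "Right",
    (("A", "Dirty"),): "Suck",
    (("A", "Dirty"), ("A", "Clean")): "Right",
}
agent = make_table_driven_agent(table)
print(agent(("A", "Dirty")))  # Suck
print(agent(("A", "Clean")))  # Right
```

The design makes the cost obvious: the table needs an entry for every possible percept sequence, which is why practical agents replace it with rules, models, or goals.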

Intelligent Agents 2 The Structure Of Agents 2 3 Structure Of An Intelligent Agent 1 Till Now We Are Talking About The Agents Behavior But How Ppt Download

A goal-based agent takes decisions based on how far it currently is from reaching its goals. A goal is nothing but the description of a desirable situation. Every agent intends to reduce its distance from the goal. This gives the agent the option of choosing among multiple possibilities to select the best route to the goal state.

Link for Simple reflex agents: https://www.youtube.com/watch?v=KZFfbebQPAU&t=218s

Link for Model Based Agents: https://www.youtube.com/watch?v=xKxh3fQwU8E&t=1

A model-based reflex agent should maintain some sort of internal model that depends on the percept history and thereby reflects at least some of the unobserved aspects of the current state. The percept history and the impact of actions on the environment can be determined using the internal model. It then chooses an action in the same way as a reflex agent. An agent may also use models to …
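A model-based reflex agent of the kind described above can be sketched for a two-square vacuum world: the agent keeps an internal model of each square's last known status, updates it from percepts and from the predicted effect of its own actions, and then applies reflex rules. The squares "A" and "B", the action names, and the stop-when-clean rule are assumptions made for the illustration:

```python
class ModelBasedVacuumAgent:
    """Reflex agent with internal state for a hypothetical two-square world (A, B)."""

    def __init__(self):
        # Internal model: last known status of each square (None = never observed).
        self.model = {"A": None, "B": None}

    def __call__(self, percept):
        location, status = percept
        self.model[location] = status        # update the model from the new percept
        if status == "Dirty":
            self.model[location] = "Clean"   # predicted effect of the Suck action
            return "Suck"
        if all(s == "Clean" for s in self.model.values()):
            return "NoOp"                    # model says everything is clean: stop
        return "Right" if location == "A" else "Left"


agent = ModelBasedVacuumAgent()
print(agent(("A", "Dirty")))  # Suck
print(agent(("A", "Clean")))  # Right
print(agent(("B", "Clean")))  # NoOp
```

The final `NoOp` depends on remembering square A's state, which is an unobserved aspect of the world while the agent stands in B.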

Pdf N Utility Based Decision Making Using Multi Agent Technology International Journal Of Artificial Intelligence And Agent Technology Vol 1 Issue 1 April

Although the goal-based agent does a lot more work than the reflex agent, this makes it much more flexible, because the knowledge used for decision making is …

- The ability to make decisions … then chooses an action in the same way as the reflex agent.

Goal-based agents: goal-based agents further expand on the capabilities of model-based agents by using "goal" information. Goal information describes situations that are desirable. This allows the agent a way …

… 3) goal-based agents, 4) utility-based agents.

1) Simple reflex agents: these agents select actions on the basis of the current percept, ignoring the rest of the percept history. Example: the vacuum agent whose agent function is tabulated in figure (3) is a simple reflex agent, because its decision is based only on the current location.
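The vacuum example can be sketched as a simple reflex agent in the usual two-square vacuum world. The square names "A" and "B" and the action names are assumptions here, since the referenced figure (3) is not reproduced. The agent reads only the current percept, its location and whether that square is dirty, and keeps no history:

```python
def reflex_vacuum_agent(percept):
    """Simple reflex agent for a two-square vacuum world: uses only the current percept."""
    location, status = percept
    if status == "Dirty":  # rule 1: clean the current square
        return "Suck"
    if location == "A":    # rule 2: otherwise move to the other square
        return "Right"
    return "Left"          # location == "B"


print(reflex_vacuum_agent(("A", "Dirty")))  # Suck
print(reflex_vacuum_agent(("B", "Clean")))  # Left
```

Unlike a model-based agent, this one never stops: with both squares clean it keeps moving back and forth, because it cannot remember that the other square is already clean.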

Types Of Ai Agents Javatpoint

Goal Based Agents

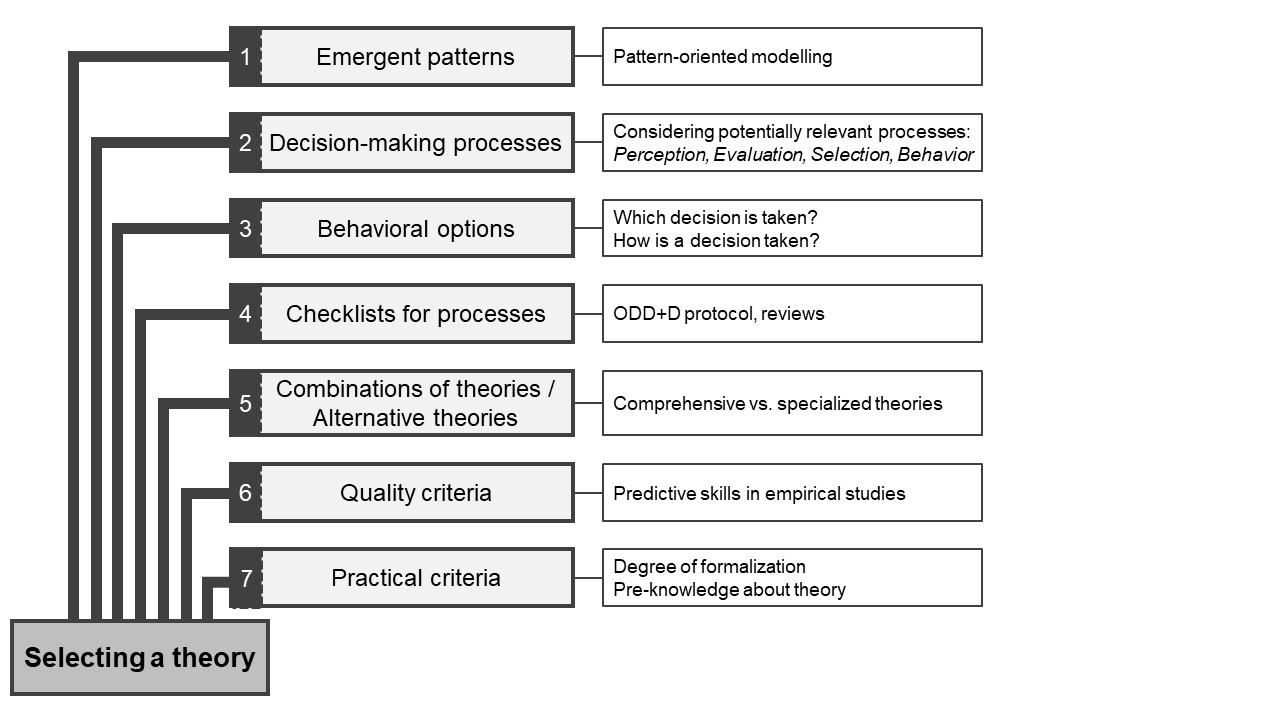

(table lookup, simple reflex, goal-based, or utility-based) Give a detailed explanation and justification of your choice. The patterns that the agent uses are matched against sets of events that occur over time. Therefore, the agent needs to maintain knowledge of the past and thus cannot be either a table-lookup agent or a simple reflex agent.
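The reasoning in this answer, that patterns matched against events over time require memory of the past, can be illustrated with a small agent that keeps a bounded history of events. This is a hypothetical sketch: the event names, the window size of 5, and the rule "the pattern must occur in order within the remembered events" are assumptions, not part of the original question:

```python
from collections import deque


class PatternAgent:
    """Keeps a bounded memory of recent events and matches a pattern against it."""

    def __init__(self, pattern, window=5):
        self.pattern = list(pattern)         # hypothetical ordered event pattern
        self.history = deque(maxlen=window)  # knowledge of the recent past

    def __call__(self, event):
        self.history.append(event)
        # True if the pattern occurs, in order, as a subsequence of the remembered
        # events (`p in it` advances the shared iterator past each match).
        it = iter(self.history)
        matched = all(p in it for p in self.pattern)
        return "alert" if matched else "ok"


agent = PatternAgent(["door_open", "motion"])
print([agent(e) for e in ["motion", "door_open", "motion"]])  # ['ok', 'ok', 'alert']
```

The same percept, "motion", yields "ok" the first time and "alert" the third time; a simple reflex agent, which sees only the current percept, could not tell the two situations apart.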

Analysis Of Intelligent Agents In Artificial Intelligence Iiec Business School

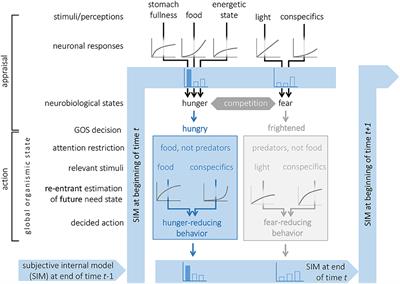

Formalising Theories Of Human Decision Making For Agent Based Modelling Of Social Ecological Systems Practical Lessons Learned And Ways Forward Socio Environmental Systems Modelling

What Is A Goal Based Agent In Ai The Polymath Blog

Frontiers Decision Making From The Animal Perspective Bridging Ecology And Subjective Cognition Ecology And Evolution

Types Of Agents

Agents

An Introduction To Ai In Test Engineering

Intelligent Agents In Artificial Intelligence Engineering Education Enged Program Section

Artificial Intelligence In Game Design Intelligent Decision Making And Decision Trees Ppt Download

Intelligent Agent Wikipedia

Chapter 2 Intelligent Agent Agents An

Artificial Intelligence Designing Agents By Aditya Kumar Datadriveninvestor

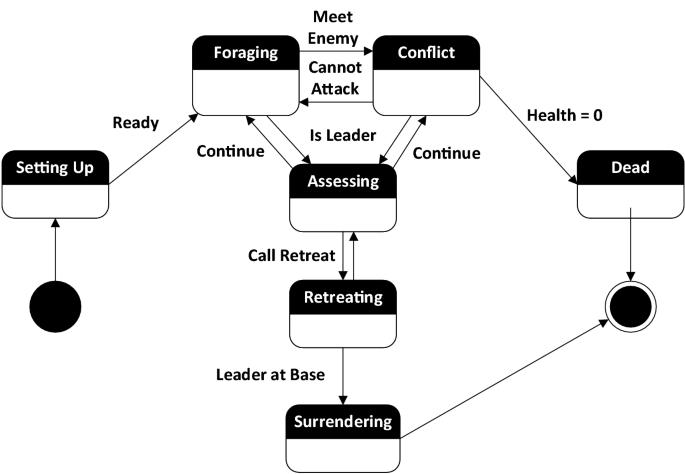

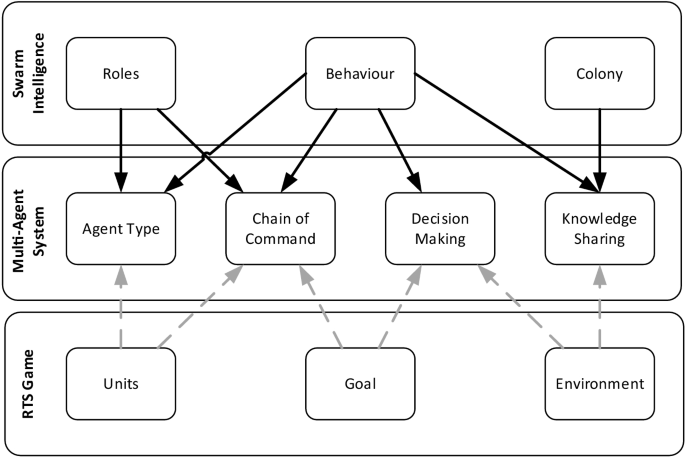

Chain Of Command In Autonomous Cooperative Agents For Battles In Real Time Strategy Games Springerlink

Artificial Intelligence

Solved 10 Points What Is The Difference Between A Chegg Com

Copy Of 2 Goal Based Agent Edited Goal Based Agent What Is A Goal Based Agent Expansion Of Studocu

Intelligent Agents N Agent Anything That Can Be

Structural And Behavioural Characteristics For Mas Ml And Mas Ml 2 0 Agents Download Table

Ppt Intelligent Agent Powerpoint Presentation Free Download Id

What Is An Utility Based Agent In Ai The Polymath Blog

Ai Agents Environments

Intelligent Agents Agents In Ai Tutorial And Example

Plans For Today N N N Chapter 2

Topics In Ai Agents

Goal Based

Model Based Agents Definition Interactions Examples Video Lesson Transcript Study Com

Intelligent Agents In A I Laptrinhx

Agents And Environment Part 2 Structure Of Agents By Rithesh K Kredo Ai Engineering Medium

Artificial Intelligence Tutorial 26 Goal Based Agent Youtube

Intelligent Agents Laptrinhx

Intelligent Agent In Ai Guide To What Is The Intelligent Agent In Ai

Artificial Intelligence Agents And Environments By Shafi Datadriveninvestor

Goal Based Agents Definition Examples Video Lesson Transcript Study Com

Ai Right Structure Of Agents For Your Business Knoldus Blogs

Artificial Intelligence Stanford Encyclopedia Of Philosophy

Ppt Intelligent Agents Powerpoint Presentation Free Download Id

What Is The Difference Between Learning Agents And Other Kinds Of Agents And More Specifically Between Performance Standard And Performance Measure Artificial Intelligence Stack Exchange

Innovation Memes Goal Based Agents

Agent Based Approaches For Adaptive Building Hvac System Control Semantic Scholar

Importance Of Intelligent Agents In Ai Tanuka S Blog

Intelligent Agents Chapter 2 Oliver Schulte Summer 11

Quiz Worksheet Goal Based Agents Study Com
